Fake or Real? Detecting AI Images from a Forensic Lens

This content is also published in Forensic Magazine. The research findings were first discussed at the Techno Security & Digital Forensics Conference in June 2024.

When explicit deepfakes of Taylor Swift flooded the internet, it was a stunning and eye-opening moment. If the biggest popstar in the world can be a victim of sexual exploitation – any of us can. The social ramifications of generative AI used for horrible acts are already here – now society must come to grips with it and lawmakers must act quickly.

It is encouraging that the Department of Justice recently arrested a Wisconsin software engineer accused of creating AI-generated child sexual abuse material (CSAM), stating that since the technology learned from real images, it’s the same crime. Yet only about a dozen states have laws on the books with any prosecution power to go after bad actors generating this harmful, explicit material. We continue to hang our heads when we hear our law enforcement partners say they brought a case to the District Attorney, who told them there was no way to prosecute it.


As lawmakers wake up and put laws in place that hold these perpetrators accountable, we researched what these images look like from a forensic lens. When it is time to build a case, law enforcement will then have the information they need to find the evidence necessary to hold offenders accountable.

How Generative AI Works

Generative AI is exactly what it sounds like: it’s designed to generate new content. That content is created from patterns the model learned from its training data, shaped by what the user inputs, and it mimics the style and structure of the original content.

These systems improve over time as they are given more accurate data to learn from, a process called machine learning. They generate new data based on the inputs, including text, images and more. Essentially, the model learns from the data, then predicts and decides for you.

With the naked eye, you are hard pressed to tell if an image is fake or real. Yet when you combine victim statements with the suspect device in question, you’re well on your way to understanding how the image was manufactured.

Focus on What is Missing

Spoiler alert – from a forensic lens, there is no glaring red flag. Yet, there are key things that are missing in an AI image, which will give you a great starting point in your investigation.

One format that will be critical in investigations is the JPEG Universal Metadata Box Format (JUMBF). Learn it and understand it. It is a standard for embedding metadata in JPEG files, allowing various types of information to be stored within a single file in a structured and standardized way. JUMBF is the binary container format. Inside it, you’ll want to seek out C2PA (Coalition for Content Provenance and Authenticity) data, which cryptographically binds metadata to a specific piece of media using hashes.
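
As a rough illustration of where that container lives, the sketch below walks the header segments of a JPEG and flags APP11 segments that look like JUMBF, plus any mention of a `c2pa` label. It assumes JUMBF boxes are carried in APP11 (`0xFFEB`) segments that begin with the `JP` identifier. The function names are our own, and this is only a presence check, not a validation of any C2PA signature.

```python
import struct

APP11 = 0xEB  # marker segment type assumed to carry JUMBF boxes
SOS = 0xDA    # start of scan: compressed image data follows
EOI = 0xD9    # end of image


def iter_jpeg_segments(data: bytes):
    """Yield (marker, payload) for each header segment before the image data."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker, skip it
            pos += 1
            continue
        if marker in (SOS, EOI):
            return
        if marker == 0x01 or 0xD0 <= marker <= 0xD7:  # markers with no length field
            pos += 2
            continue
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        yield marker, data[pos + 4:pos + 2 + length]
        pos += 2 + length


def provenance_hints(data: bytes) -> dict:
    """Heuristic check for embedded JUMBF / C2PA data in a JPEG."""
    app11 = [p for m, p in iter_jpeg_segments(data) if m == APP11]
    return {
        "app11_segments": len(app11),
        "has_jumbf": any(p[:2] == b"JP" and b"jumb" in p for p in app11),
        "mentions_c2pa": any(b"c2pa" in p for p in app11),
    }
```

On a working copy of an exhibit, you could call `provenance_hints(open(path, "rb").read())`. A result with no APP11 segments only tells you that no provenance data is embedded; it does not by itself say whether the image is real or generated.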

Our Findings

The file size of an AI image is generally smaller, but you can’t use that alone to identify it. Still, it’s a nice marker that tells you to start looking in that direction.

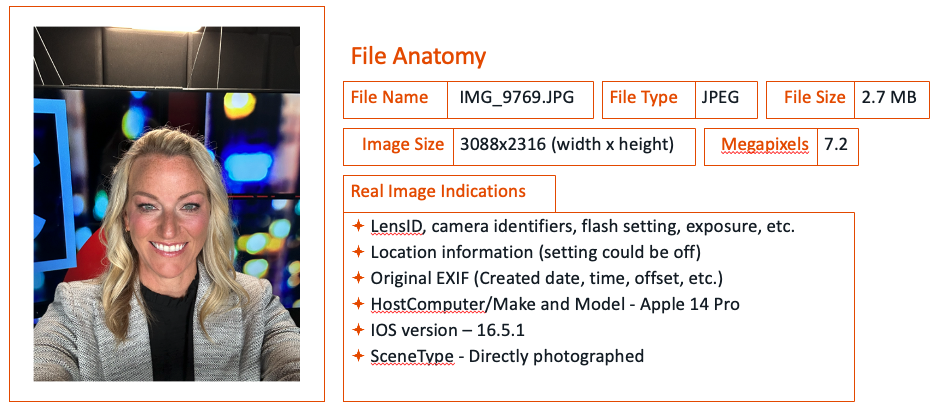

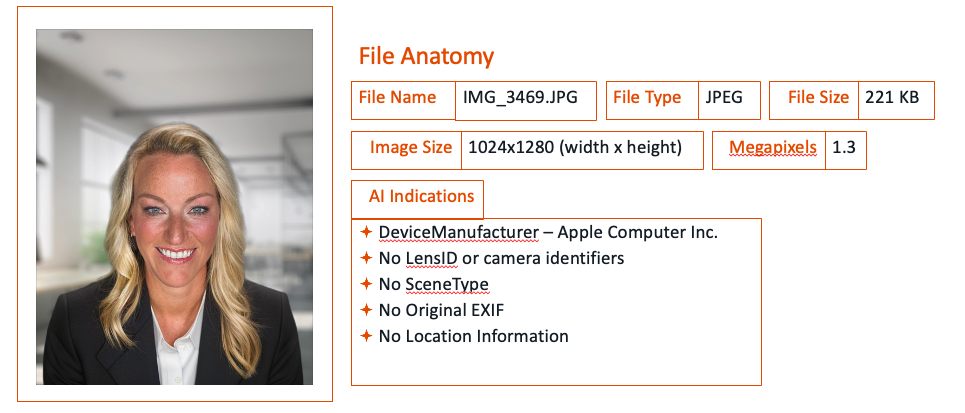

When comparing the AI image to a real image, real images give everything. Here’s an example of a real image of Heather on the left and the AI one on the right.

The real image had everything:

- LensID, camera ID, flash setting, exposure, etc.

- Location information (note the setting could be switched off)

- Original EXIF (created date, time, offset, etc.)

- HostComputer/Make and Model – Apple 14 Pro

- iOS version – 16.5.1

- SceneType – Directly photographed

The AI image, by contrast, showed the following:

- DeviceManufacturer – Apple Computer Inc.

- No LensID or camera identifiers

- No SceneType

- No Original EXIF

- No Location Information
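
The comparison above can be sketched as a simple "what is missing" check. This is a minimal example, assuming the metadata has already been exported from your forensic tool as a dictionary. The tag names follow ExifTool-style naming and were chosen for illustration, and the sample dictionaries are hypothetical placeholders rather than the actual data from our test images.

```python
# Tags a phone camera photo typically carries (ExifTool-style names, illustrative).
EXPECTED_CAMERA_TAGS = (
    "Make",
    "Model",
    "HostComputer",
    "Software",
    "LensID",
    "SceneType",
    "DateTimeOriginal",
    "OffsetTimeOriginal",
    "GPSLatitude",
    "GPSLongitude",
)


def missing_tags(metadata: dict, expected=EXPECTED_CAMERA_TAGS) -> list:
    """Return the expected tags that are absent or empty in a metadata dump."""
    return [tag for tag in expected if metadata.get(tag) in (None, "")]


# Hypothetical metadata dumps with placeholder values.
real_image = {tag: "<present>" for tag in EXPECTED_CAMERA_TAGS}
ai_image = {"DeviceManufacturer": "Apple Computer Inc."}

print(missing_tags(real_image))  # → []
print(missing_tags(ai_image))    # every expected camera tag is reported missing
```

A long list of missing tags is a lead, not proof. Location services may be switched off, and many apps strip metadata when an image is shared.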

To summarize: file path, file size, file name and other metadata typical of images are missing in AI images, as if the device has no idea where the image came from.

Always Rely on Traditional Digital Forensics Investigative Work

- Start with the basics: Benchmark what’s normal for the device. How does it behave? What should the files look like?

- Use timelining: As you would in any case, take a close look at what was happening around the time of the file’s creation. What was used? What is missing?

- Follow the money: Good old-fashioned detective work always comes into play in digital investigations. There are free AI-generation tools out there, but the saying “you get what you pay for” holds true here. Those creating sophisticated images really need to pay for the AI tool. The payment to the AI service is a great investigative track to follow.
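
The timelining step above can be sketched in a few lines. This is a minimal example, assuming you are working on an extracted copy of the file system and that modification times were preserved during extraction. The function name and the 30-minute window are our own choices, and in real casework your forensic tool’s timeline is the better source.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path


def files_near(target: Path, root: Path, window: timedelta = timedelta(minutes=30)):
    """Return (mtime, path) pairs under root modified within `window` of target."""
    t0 = target.stat().st_mtime
    hits = []
    for path in root.rglob("*"):
        if path.is_file() and path != target:
            mtime = path.stat().st_mtime
            if abs(mtime - t0) <= window.total_seconds():
                hits.append((datetime.fromtimestamp(mtime, tz=timezone.utc), path))
    return sorted(hits)  # oldest first
```

Reading the results in order shows what else was touched on the device around the time the image appeared, such as app data, downloads or browser artifacts.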

When we started this research, we were so focused on what we would find. In the end – it really is about what is missing.

This should give you a strong foundation for your investigation. Here’s hoping that laws are on the books that will allow you to build a strong case and hold the image creator accountable.

About the Authors

Heather Barnhart is the Senior Director of Community Engagement at Cellebrite, a global leader in premier Digital Investigative solutions for the public and private sectors. She educates and advises digital forensic professionals on cases around the globe. For more than 20 years, Heather’s worked on high-profile cases, investigating everything from child exploitation to Osama Bin Laden’s digital media.

Jared Barnhart is the Customer Experience Team Lead at Cellebrite, a global leader in premier Digital Investigative solutions for the public and private sectors. A former detective and mobile forensics engineer, Jared is highly specialized in digital forensics, regularly training law enforcement and lending his expertise to help them solve cases and accelerate justice.